Prompt engineering is essential for interacting effectively with AI models: well-designed prompts make responses more accurate, relevant, and well structured. Here are the key areas of focus in prompt engineering:

1. Clarity and Precision

- Use Clear Language: The prompt should be concise and free of ambiguity. Avoid using vague terms or language that can lead to multiple interpretations.

- Provide Specific Instructions: Specify exactly what you want the AI to focus on, including details on structure, style, and format, to reduce misinterpretation.

- Avoid Overly Broad Questions: Make the question or request as specific as possible to get more focused and relevant results.

- Example: Instead of asking, “What is AI?” you could ask, “Can you explain the differences between supervised and unsupervised learning in AI?”

2. Contextual Information

- Provide Context: To get more accurate responses, give background information or context relevant to the task or question.

- Roleplay Contexts: If necessary, you can instruct the AI to act in a certain role (e.g., “Pretend you are a business analyst,” or “Imagine you are writing for a technical audience”).

- Example: “As a marketing manager, explain how AI can enhance customer engagement through personalization.”

3. Constraints and Boundaries

- Set Limits: Instruct the AI on the length or depth of the response, format (bullet points, paragraphs, lists), or specific elements to include/exclude.

- Guide on Formality or Tone: Specify whether the response should be formal, casual, creative, technical, etc.

- Example: “Summarize the benefits of cloud computing in fewer than 100 words with a formal tone.”

4. Multi-Step Instructions

- Break Down Complex Tasks: If the task is complex, break it into multiple steps or give the AI sequential instructions.

- Prioritize or Emphasize Key Points: Use clear steps to ask the AI to focus on certain areas or solve a problem progressively.

- Example: “First, explain the concept of blockchain. Then, discuss its applications in the financial industry.”

5. Iterative Refinement

- Follow-Up Prompts: After receiving a response, refine or clarify with follow-up prompts to correct errors, get more detail, or change focus.

- Adaptive Prompting: Adjust the prompt based on the AI’s prior response. Prompt engineering is often iterative: you gradually refine the question or instruction until the output matches what you need.

- Example: If the AI gives a high-level answer, follow up with, “Can you provide a more detailed explanation of the technical process?”

6. Use of Examples

- Provide Examples: When asking for complex output, show a template or example of what the desired result looks like. This helps the AI to model its response more closely to your expectations.

- Demonstrate Desired Output: If you’re asking for a list, formula, or code, show an example to guide the AI.

- Example: “Create a Python function that calculates the factorial of a number. Example input: 5. Expected output: 120.”
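The idea of showing the model worked examples can be sketched in code. The snippet below is a minimal illustration, not a standard API: the function name `build_few_shot_prompt` and the `Input:`/`Output:` labels are assumptions chosen for this example.

```python
def build_few_shot_prompt(task: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a prompt that shows input/output pairs before the real query."""
    lines = [task, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    # End with the real input and an open "Output:" slot for the model to fill.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    task="Compute the factorial of the given number.",
    examples=[("5", "120"), ("3", "6")],
    query="4",
)
print(prompt)
```

Ending the prompt on an open `Output:` line is one common way to signal that the model should continue in the same pattern as the examples.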

7. Conditional Instructions

- Use Conditions to Direct Behavior: Provide the AI with conditional logic to create a dynamic response. For instance, “If X happens, respond with Y.”

- “If-Then” Structures: These help guide how the AI should behave based on different inputs.

- Example: “If the answer includes economic data, summarize it in bullet points. Otherwise, provide a paragraph explanation.”

8. Handling Ambiguity and Edge Cases

- Preempt Edge Cases: Address potential confusion or ambiguity directly in the prompt by specifying how to handle edge cases or unknowns.

- Fallback Instructions: Ask the AI to explicitly state when it doesn’t know something or if it’s unsure.

- Example: “If you don’t know the answer to a specific detail, just say ‘I’m not sure’ instead of making an assumption.”

9. Structuring the Output

- Request Specific Output Formats: For complex tasks, specify the structure of the output, such as lists, tables, code blocks, or sections.

- Labeling Sections: You can request that the AI divide the response into labeled sections (e.g., Introduction, Main Points, Conclusion).

- Example: “Summarize the following article in three sections: Key Findings, Methodology, and Conclusion.”
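When you request labeled sections, it can help to check that the response actually contains them. The following is a rough sketch under simple assumptions: the helper `missing_sections` is hypothetical, the sample response is invented for illustration, and a label only counts if it sits on a line of its own.

```python
def missing_sections(response: str, required: list[str]) -> list[str]:
    """Return the required labels that do not appear on a line of their own."""
    # Strip common heading decoration so "## Key Findings" and "Key Findings:" both count.
    found = {line.strip().strip("#*: ").lower() for line in response.splitlines()}
    return [name for name in required if name.lower() not in found]


required = ["Key Findings", "Methodology", "Conclusion"]

# Invented sample response that forgot the last section.
sample = "Key Findings:\nSales rose.\n\nMethodology:\nSurvey of 200 stores."

print(missing_sections(sample, required))  # ['Conclusion']
```

If the list is not empty, a follow-up prompt can ask the AI to add the missing sections.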

10. Incorporating Domain-Specific Language

- Use Domain-Specific Jargon: If the prompt involves a technical or specialized field, use terminology and language from that domain to ensure accurate and contextually appropriate responses.

- Instruct on Complexity Level: Specify the technical complexity or audience knowledge level (e.g., beginner, intermediate, expert).

- Example: “In simple terms, explain the basic principles of quantum computing to a non-technical audience.”

11. Prompt Framing with Bias Control

- Minimize Bias in Responses: Be aware of potential biases in the AI’s answers and guide it accordingly. You can provide neutral phrasing or clarify that the response should avoid any bias.

- Balance Perspectives: If you’re asking for opinions or explanations, instruct the AI to provide multiple perspectives on the issue.

- Example: “Provide both the advantages and disadvantages of using artificial intelligence in healthcare.”

12. Personality or Stylistic Adjustments

- Ask for a Specific Tone or Style: Instruct the AI to respond in a particular tone, such as professional, creative, technical, or conversational.

- Modify Voice or Character: You can tell the AI to respond as a particular character or person, useful in creative or role-playing contexts.

- Example: “Write a product description for a smartwatch as if you’re an enthusiastic tech blogger.”

13. Chaining Prompts Together

- Use Prompt Chaining for Complex Tasks: For more sophisticated interactions, you can chain multiple prompts together, where each subsequent prompt builds on the prior response.

- Example: First, ask the AI to explain a concept, then follow up with an application of the concept to a real-world scenario.

14. Negative Prompting

- Explicitly Exclude Unwanted Information: Negative prompting is asking the AI to avoid certain information or behaviors in its response.

- Example: “Explain machine learning, but do not discuss neural networks or deep learning.”

15. Testing and Iteration

- Test Prompts: Continuously test and modify prompts to improve the accuracy, relevance, and quality of the responses.

- Feedback Loops: Treat each response as feedback: note where it falls short and revise the prompt to address that gap.

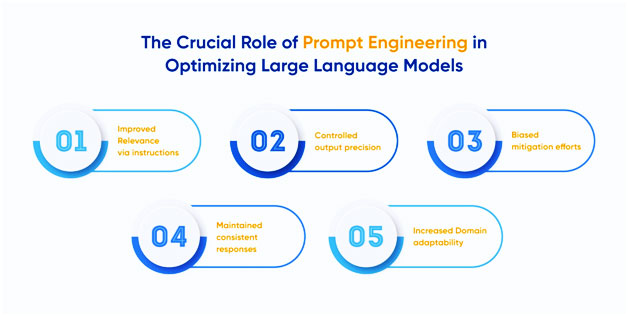

How does Prompt Engineering work?

Prompt engineering is the process of designing and refining prompts (inputs or questions) to guide AI systems, like large language models, toward generating the desired output. Since these AI models rely on the prompt for context, clarity, and instruction, prompt engineering involves understanding how to shape input in a way that maximizes the accuracy, relevance, and specificity of the response. Here’s how it works, broken down into steps:

1. Understanding the Capabilities of the AI Model

- Foundation Models: AI models are pre-trained on large datasets and can generate text, answer questions, summarize, translate, etc., based on the input they receive.

- Contextual Sensitivity: These models use the prompt to build an understanding of the context and determine how to generate an output that is aligned with the user’s request.

- Token-Based Generation: The AI generates text one token at a time (a token is a word or part of a word), based on the sequence and content of the input.
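To make token-by-token generation concrete, here is a deliberately tiny toy. It is not how a real language model works internally: real models score the next token using the whole preceding sequence and learned parameters, while this sketch looks only at the last token and uses a hand-written score table (`NEXT_TOKEN_SCORES`, invented for this example).

```python
# Hand-written toy scores: each token maps to possible next tokens.
NEXT_TOKEN_SCORES = {
    "the": {"prompt": 1.0},
    "prompt": {"engineering": 0.9, "<end>": 0.1},
    "engineering": {"works": 0.7, "<end>": 0.3},
    "works": {"<end>": 1.0},
}


def generate(prompt_tokens: list[str], max_new_tokens: int = 10) -> list[str]:
    """Greedy generation: repeatedly append the highest-scoring next token."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        if not tokens:
            break
        candidates = NEXT_TOKEN_SCORES.get(tokens[-1])
        if not candidates:
            break  # unknown token: nothing to continue from
        next_token = max(candidates, key=candidates.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens


print(generate(["the"]))     # ['the', 'prompt', 'engineering', 'works']
print(generate(["prompt"]))  # ['prompt', 'engineering', 'works']
```

Even in this toy, the output depends entirely on what the input ends with, which is the intuition behind why the wording of a prompt matters.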

2. Crafting a Clear and Specific Prompt

- The more precise and clear a prompt is, the more likely the AI is to generate a useful response. Vague or ambiguous prompts lead to generic or incomplete answers.

- Prompts can vary in complexity:

- Simple prompts: “Explain machine learning.”

- Detailed prompts: “Explain machine learning to a beginner, focusing on how it helps businesses improve decision-making, in fewer than 150 words.”
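The step from a simple prompt to a detailed one can be expressed as a small template. This is only a sketch: `build_prompt` and its parameter names (`audience`, `focus`, `max_words`) are assumptions made for illustration.

```python
from typing import Optional


def build_prompt(
    topic: str,
    audience: Optional[str] = None,
    focus: Optional[str] = None,
    max_words: Optional[int] = None,
) -> str:
    """Start from a bare request and add detail only where it is supplied."""
    sentence = f"Explain {topic}"
    if audience:
        sentence += f" to {audience}"
    if focus:
        sentence += f", focusing on {focus}"
    if max_words:
        sentence += f", in fewer than {max_words} words"
    return sentence + "."


simple = build_prompt("machine learning")
detailed = build_prompt(
    "machine learning",
    audience="a beginner",
    focus="how it helps businesses improve decision-making",
    max_words=150,
)
print(simple)    # Explain machine learning.
print(detailed)
```

Keeping audience, focus, and length as separate parameters makes it easy to vary one detail at a time and see how the response changes.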

3. Breaking Down Complex Instructions

- For tasks requiring more detail, prompt engineering allows the user to break down instructions into steps or components. For example:

- Step-by-step task: “First, summarize the article. Then, explain the key findings.”

- Multi-part queries: “List the advantages of using AI in healthcare, then explain any potential risks.”

4. Iterative Refinement

- Often, a single prompt won’t give the perfect result, especially for more complex tasks. Iteration is key to refining prompts. If the initial output is unsatisfactory, the prompt can be adjusted by adding more details, setting constraints, or asking the AI to rephrase or clarify its response.

- First prompt: “What is AI?”

- Refined prompt: “What are the key differences between AI, machine learning, and deep learning in the context of business automation?”

5. Leveraging Context and Constraints

- Contextualizing the prompt with additional information can help the AI focus on the right subject matter. Constraints, such as word limits or specifying the desired structure of the output, also help guide the model’s response.

- Example with context: “As a data scientist, explain the importance of feature engineering in machine learning.”

- Example with constraints: “Summarize this research paper in no more than 100 words.”

6. Roleplaying and Conditioning Responses

- You can condition the AI to respond from a particular perspective, such as asking it to act as an expert in a specific domain or in a certain role. This technique helps tailor the response to the intended audience or format.

- Example: “Pretend you are a cybersecurity expert. Explain how phishing attacks work to a non-technical audience.”

7. Providing Examples

- Sometimes, providing examples within the prompt helps the AI model better understand the format or type of response expected.

- With example: “Write a brief product description for a smartwatch. Example: ‘This sleek and modern smartwatch features heart rate monitoring, GPS tracking, and water resistance, perfect for fitness enthusiasts.’”

8. Chaining Prompts for Complex Tasks

- Prompt chaining involves breaking down complex tasks into a series of simpler prompts, where the output from one prompt feeds into the next. This is especially useful for tasks that require multiple steps.

- Example chain:

- First prompt: “Summarize the benefits of AI in retail.”

- Follow-up prompt: “Now explain how retailers can implement AI in their supply chain management.”
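The chain above can be sketched as a loop in which each response is inserted into the next prompt. This is a minimal sketch, not a real client: `run_chain` is a hypothetical helper, the `{previous}` placeholder is an assumption of this example, and `echo_model` is a stand-in that simply echoes its prompt so the code runs without any AI service.

```python
from typing import Callable


def run_chain(templates: list[str], model: Callable[[str], str]) -> list[str]:
    """Run prompts in order, feeding each output into the next template."""
    outputs: list[str] = []
    previous = ""
    for template in templates:
        prompt = template.format(previous=previous)
        previous = model(prompt)
        outputs.append(previous)
    return outputs


def echo_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; it only echoes the prompt.
    return f"[response to: {prompt}]"


steps = [
    "Summarize the benefits of AI in retail.",
    "Given this summary:\n{previous}\n"
    "Now explain how retailers can implement AI in their supply chain management.",
]
results = run_chain(steps, echo_model)
print(results[1])
```

To use it with an actual model, replace `echo_model` with a function that sends the prompt to that model and returns its text. Templates that contain literal braces would need escaping, since `str.format` treats them as placeholders.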

9. Handling Ambiguity and Uncertainty

- Sometimes, prompts may include ambiguous instructions. Prompt engineering helps reduce ambiguity by explicitly stating what should be avoided or how the model should handle unknowns.

- Example: “If you’re not sure of the answer, respond with ‘I don’t know’ rather than guessing.”

10. Using Conditional Instructions

- Prompt engineering allows the use of conditional logic in prompts, directing the AI to behave in a certain way based on certain criteria.

- Example: “If the customer asks about pricing, direct them to the sales team. Otherwise, provide information about our product features.”

11. Structuring Output with Format Requests

- The output can be structured according to the request, such as asking for lists, tables, paragraphs, or sections. This helps when working with specific formats or requirements.

- Example: “Create a pros and cons list for using cloud computing in small businesses.”

- Another example: “Provide a step-by-step guide on how to install this software.”

12. Negative Prompting

- In some cases, you want to prevent the AI from generating specific types of content. Negative prompting explicitly states what should be excluded from the response.

- Example: “Explain the benefits of AI but do not discuss machine learning or neural networks.”

13. Testing and Optimizing Prompts

- Once a prompt is designed, it can be tested and optimized by tweaking the phrasing, adding more details, or breaking the task into smaller parts. This is often done through an iterative process where different versions of the prompt are tested to find the best approach.
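Comparing prompt versions can be made systematic with a small harness. Everything below is illustrative: `rank_prompts` and `keyword_score` are hypothetical helpers, the keyword check is a crude proxy for quality, and the responses in `CANNED` are made-up placeholders standing in for real model output.

```python
from typing import Callable

# Made-up placeholder responses, standing in for real model output.
CANNED = {
    "Explain blockchain.": "Blockchain is a distributed ledger.",
    "Explain how blockchain improves supply chain transparency.":
        "A shared ledger gives supply chain partners transparency and helps reduce fraud.",
}


def canned_model(prompt: str) -> str:
    return CANNED[prompt]


def keyword_score(response: str, keywords=("supply chain", "transparency", "fraud")) -> float:
    """Fraction of the expected keywords that appear in the response."""
    text = response.lower()
    return sum(keyword in text for keyword in keywords) / len(keywords)


def rank_prompts(
    variants: list[str],
    model: Callable[[str], str],
    score: Callable[[str], float],
) -> list[tuple[float, str]]:
    """Run each prompt variant through the model and sort by score, best first."""
    scored = [(score(model(prompt)), prompt) for prompt in variants]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


ranking = rank_prompts(list(CANNED), canned_model, keyword_score)
best_score, best_prompt = ranking[0]
print(best_score, best_prompt)
```

In practice the scoring function is the hard part. Keyword matching is only a starting point, and human review of the top candidates is usually still needed.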

14. Limitations and Challenges

- Context Window: The AI model has a limited “context window” that caps how much text it can process at once, typically counting both the input and the generated output. Prompt engineers must ensure the prompt and any relevant background information fit within this window.

- Bias and Hallucination: AI models sometimes introduce bias or “hallucinate” information, so it is important to phrase prompts neutrally and to tell the model explicitly not to invent content it cannot support.
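The context window limit can be handled with a simple budget check before sending a prompt. The sketch below rests on loud assumptions: it counts one token per whitespace-separated word, which real tokenizers do not do, and the helper names and limits are invented for illustration. It also assumes the window is shared between the prompt and the reply.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate: one token per whitespace-separated word (real tokenizers differ)."""
    return len(text.split())


def trim_to_budget(instruction: str, background: str, max_tokens: int, reserved_for_reply: int) -> str:
    """Keep the instruction whole and cut background words until the prompt fits."""
    budget = max_tokens - reserved_for_reply - estimate_tokens(instruction)
    if budget < 0:
        raise ValueError("instruction alone exceeds the available budget")
    kept = background.split()[:budget]
    return instruction + "\n\n" + " ".join(kept)


instruction = "Summarize this research paper in no more than 100 words."
background = "word " * 50  # placeholder for the paper text

prompt = trim_to_budget(instruction, background, max_tokens=40, reserved_for_reply=20)
print(estimate_tokens(prompt))  # 20
```

Cutting from the end of the background is the simplest policy. Summarizing or selecting the most relevant passages usually preserves more useful context.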

Example of Prompt Engineering in Action

Initial Prompt:

- “Explain blockchain.”

This prompt is likely to result in a generic explanation of blockchain.

Refined Prompt:

- “Explain how blockchain technology works in the context of supply chain management, focusing on its ability to enhance transparency and reduce fraud.”

This refined prompt gives the model context (supply chain management), a focus (transparency and fraud reduction), and a more specific request that results in a tailored response.

Conclusion

Prompt engineering works by crafting the input so that the AI understands the user’s intent clearly and responds appropriately. It’s about leveraging the AI’s capabilities while minimizing ambiguity, ensuring relevance, and iterating to refine the quality of the output. The process often involves specifying the context and output format, handling conditions, and providing examples to guide the AI toward the desired result.